Luigi La Corte

At the dawn of the cloud revolution, which saw enterprises move their data from on-premises servers to the cloud, Amazon, Google and Microsoft succeeded at least in part because they treated security as a fundamental concern. No large-scale customer would even consider working with a cloud company that wasn’t SOC2 certified.

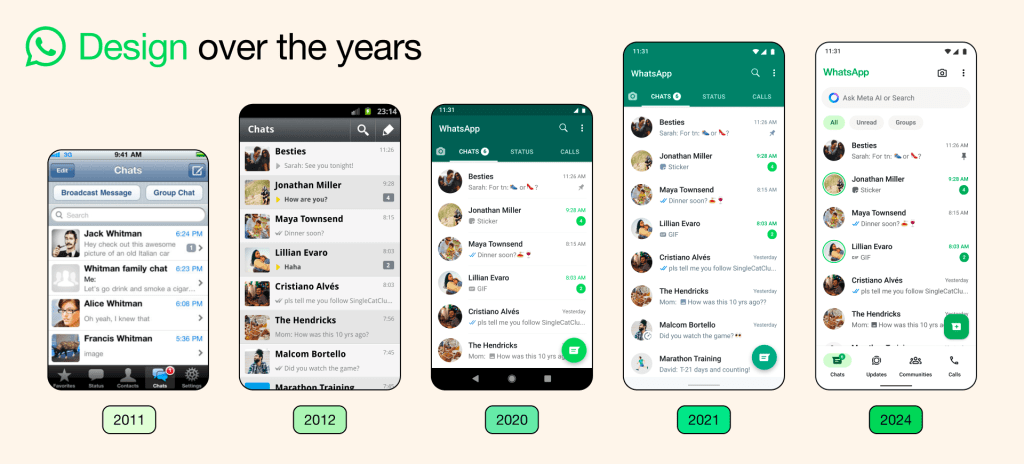

Today, another generational transformation is taking place, with 65% of workers already saying they use AI on a daily basis. Large language models (LLMs) such as ChatGPT will likely upend business in the same way cloud computing and SaaS subscription models did once before.

Yet again, this nascent technology brings well-earned skepticism. LLMs risk “hallucinating” fabricated information, sharing real information incorrectly, and retaining sensitive company information fed to them by uninformed employees.

Any industry that LLMs touch will require an enormous level of trust between aspiring service providers and their B2B clients, who ultimately bear the risk of poor performance. Clients will want to examine your reputation, data integrity, security, and certifications. Providers that take active steps to reduce LLM “randomness” and build the most trust will be outsized winners.

For now, there are no regulatory bodies that can give you a “trustworthy” stamp of approval to show potential clients. However, here are ways your generative AI organization can operate as an open book and build trust with potential customers.

Seek certifications where you can and support regulations

Although there are currently no certifications specific to data security in generative AI, obtaining as many adjacent credentials as possible will only help your credibility: SOC2 compliance, ISO/IEC 27001 certification, and demonstrable GDPR (General Data Protection Regulation) compliance, for example.

You also want to stay up to date on data privacy regulations, which differ by region. For example, when Meta recently released its Twitter competitor Threads, it did not launch in the EU because of concerns over the legality of its data tracking and profiling practices.

Because you’re forging a brand-new path in an emerging niche, you may also be in a position to help shape regulations. Unlike with Big Tech advancements of the past, regulators such as the FTC are moving far more quickly to investigate the safety of generative AI platforms.

While you may not be shaking hands with heads of state as Sam Altman has, consider reaching out to local politicians and committee members to offer your expertise and collaboration. By demonstrating your willingness to help create guardrails, you signal that you want only the best for those you intend to serve.

Set your own safety benchmarks and publish your journey

In the absence of official regulations, you should be setting your own benchmarks for safety. Create a roadmap with milestones that you consider proof of trustworthiness. This may include things like setting up a quality assurance framework, achieving a certain level of encryption, or running a number of tests.

As you achieve these milestones, share them! Draw potential customers’ attention to these attempts at self-regulation through white papers and articles. By showing that safety achievements are front of mind, you’re establishing your own credibility.

You’ll also want to be open about which LLMs or APIs you’re using, as this gives others a fuller understanding of how your technology functions and establishes greater trust.

When possible, open source your testing plan and results. Provide highly detailed test cases using a simple framework: questions, answers, and a rating for each answer against a benchmark.
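
As a minimal sketch of what such a published test record could look like, the Python below assumes a hypothetical 1–5 reviewer rating and a hypothetical passing benchmark of 4. The names `TestCase`, `summarize`, and `to_json`, and the sample cases, are illustrative only, not an established format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TestCase:
    """One published test case: the question, expected and actual answers, and a rating."""
    question: str
    expected_answer: str
    model_answer: str
    rating: int  # hypothetical 1-5 score assigned by a human reviewer


def summarize(cases: list, benchmark: int = 4) -> dict:
    """Count how many cases meet or beat the (hypothetical) benchmark rating."""
    passed = sum(1 for case in cases if case.rating >= benchmark)
    return {
        "total": len(cases),
        "passed": passed,
        "pass_rate": passed / len(cases) if cases else 0.0,
        "benchmark": benchmark,
    }


def to_json(cases: list, benchmark: int = 4) -> str:
    """Serialize the cases plus their summary so results can be published as-is."""
    return json.dumps(
        {"cases": [asdict(case) for case in cases], "summary": summarize(cases, benchmark)},
        indent=2,
    )


# Illustrative cases only; real ones would come from your own test plan.
cases = [
    TestCase("What is the notice period in clause 4?", "30 days", "30 days", 5),
    TestCase("Who is the indemnifying party?", "The supplier", "Both parties", 2),
    TestCase("Is there an auto-renewal term?", "Yes, annually", "Yes, every year", 4),
]

print(to_json(cases))
```

Publishing the raw cases alongside the summary lets a prospective customer re-score them against their own benchmark rather than relying on a single headline pass rate.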

Open sourcing parts of your process will only build trust with your user base, and they’ll likely ask to see examples during procurement.

Back up the data integrity of your product

Liability is a complicated issue. Take risk in the construction industry as an example. Construction firms can outsource risk management to lawyers, which lets them hold that third party accountable if something goes wrong.

But if you, as a new provider, offer AI tools that can replace a legal advisor at a 10x–100x lower price, the likely trade-off is that you’ll absorb far less liability. So the next best thing you can offer is integrity.

We think that integrity will look like an auditable quality assurance process that potential customers can peer into. Users should know which outputs are currently “in distribution” (i.e., which outputs your product can provide reliably), and which aren’t. They should also be able to audit the output from tests in order to build confidence in your product. Enabling prospective customers to do so puts you ahead of the curve.
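
One way to make “in distribution” concrete is to group audited test outcomes by task category and mark a category as reliable only when it has enough samples and clears an accuracy bar. The Python sketch below assumes results arrive as `(category, is_correct)` pairs; the thresholds (95% accuracy, 50 samples) and the category names are hypothetical placeholders, not recommended values.

```python
from collections import defaultdict


def distribution_report(results, min_accuracy=0.95, min_samples=50):
    """Summarize audited outcomes per task category.

    results: iterable of (category, is_correct) pairs from logged test runs.
    A category counts as "in distribution" only if it has at least
    min_samples audited outputs AND accuracy of at least min_accuracy
    (both thresholds are hypothetical and should be agreed with the customer).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for category, is_correct in results:
        totals[category] += 1
        correct[category] += int(is_correct)

    report = {}
    for category in sorted(totals):
        n = totals[category]
        accuracy = correct[category] / n
        report[category] = {
            "samples": n,
            "accuracy": round(accuracy, 3),
            "in_distribution": n >= min_samples and accuracy >= min_accuracy,
        }
    return report


# Hypothetical audit log: contract summaries are well covered, tax questions are not.
results = (
    [("contract_summary", True)] * 98
    + [("contract_summary", False)] * 2
    + [("tax_question", True)] * 30
    + [("tax_question", False)] * 10
)

for category, stats in distribution_report(results).items():
    print(category, stats)
```

Requiring a minimum sample count keeps a category with only a handful of lucky passes from being advertised as reliable.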

Along those lines, AI providers will need to start explaining data integrity as a new “leave-behind” pillar. In traditional B2B SaaS, businesses address common questions such as “security” or “pricing” with leave-behind materials like digital pamphlets.

Providers will now have to do the same with data integrity, explaining why and how they can promise “no hallucination,” “no bias,” “edge-case tested,” and so on. They will always need to back these claims with quality assurance.

(As an aside, once AI agents proliferate, we’ll likely also see underwriters creating policies for their errors and omissions.)

Stress test your product until your error rate is acceptable

It may be impossible to guarantee that an LLM-based platform never makes mistakes, but you’ve got to do whatever it takes to bring your error rate as low as possible. Vertical AI solutions will benefit from tighter, more focused feedback loops, ideally fed by a steady stream of early usage data, that drive their error rates down over time.

In some industries, the margin for error may be more flexible than others — think caricature generators versus code generators.
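
A single observed error rate can be misleading when the test set is small. A minimal sketch, assuming you count errors over a batch of reviewed outputs, is to compare the upper end of a confidence interval against the error rate the client has agreed to accept. Here we use the standard Wilson score interval at roughly 95% confidence (z = 1.96); the function names and the 2% threshold are hypothetical.

```python
import math


def wilson_upper_bound(errors: int, trials: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for the true error rate."""
    if trials == 0:
        return 1.0  # no evidence yet, so assume the worst
    p = errors / trials
    z2 = z * z
    center = (p + z2 / (2 * trials)) / (1 + z2 / trials)
    margin = (z / (1 + z2 / trials)) * math.sqrt(
        p * (1 - p) / trials + z2 / (4 * trials * trials)
    )
    return center + margin


def meets_acceptable_rate(errors: int, trials: int, acceptable: float) -> bool:
    """True only if the interval's upper end is at or below `acceptable`."""
    return wilson_upper_bound(errors, trials) <= acceptable


# 12 errors in 1,000 reviewed outputs is a 1.2% observed rate...
print(round(wilson_upper_bound(12, 1000), 4))
# ...but the interval still reaches past 2%, so it does not yet clear a 2% bar.
print(meets_acceptable_rate(12, 1000, acceptable=0.02))
# The same observed rate over 10,000 outputs does.
print(meets_acceptable_rate(120, 10000, acceptable=0.02))
```

The design is deliberately conservative: a provider claims an error rate only once it has enough reviewed outputs to back it up, which is the kind of evidence a prudent buyer can check.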

But the honest answer is that a good error rate is one the client accepts with eyes wide open. In some cases you want to minimize false negatives; in others, false positives. Error will need to be scrutinized more closely than a single number (e.g., “99% accurate”) allows. If I were a buyer, I would instead ask:

- “What’s your F1 score?”

- “When designing, what type of error did you index on? Why?”

- “In a balanced dataset, what would your error rate be for labeling data?”

These questions will reveal how seriously a provider takes its iteration process.
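
To see why a single accuracy figure hides the type of error, consider the sketch below. It computes precision, recall, F1, and balanced accuracy (the mean of recall and specificity, which behaves like accuracy on a balanced dataset) from binary labels. The example data is hypothetical: a rare-event classifier that always predicts “negative” on a set with 5 positives out of 100.

```python
def binary_metrics(y_true, y_pred):
    """Error breakdown for binary labels where 1 = positive and 0 = negative."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)

    # Ratios with a zero denominator are defined as 0.0 by convention here.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # a.k.a. sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "balanced_accuracy": (recall + specificity) / 2,
        "false_positives": fp,
        "false_negatives": fn,
    }


# Hypothetical data: 5 true positives hidden among 100 examples.
y_true = [1] * 5 + [0] * 95
always_negative = [0] * 100

for name, value in binary_metrics(y_true, always_negative).items():
    print(f"{name}: {value}")
```

This model looks 95% accurate yet never catches a positive: its F1 score is 0 and its balanced accuracy is 0.5, no better than a coin flip. That is exactly the gap the buyer questions above are meant to expose.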

An absence of regulation and guidelines does not mean customers are naive when assessing your level of risk as an AI provider. A prudent customer will demand that any company prove its product performs within an acceptable error rate and respects robust safeguards. Providers that can’t will surely lose.