Data center transformation: The road to 400Gbps and beyond

As more mission-critical workloads run on denser and faster data center infrastructure, the need for speed and efficiency from high-performance networking grows with them.

When we look at the data being generated at this unprecedented volume and velocity, we see a growing need to manage and analyze it in order to draw actionable insights that benefit the business or organization. That is only possible if we can correlate historical stored data with near-real-time data. Predictive analytics can then enable an enhanced customer experience for latency-sensitive applications.

What is driving the need for increased bandwidth?

If we look at what is driving the need for increased bandwidth, we find growing densities of virtualized servers, which have increased both north-south and east-west traffic in the data center. The massive shift to machine-to-machine traffic has resulted in a major increase in the network bandwidth required to accommodate demand. The arrival of faster storage in the form of solid state devices such as Flash and NVMe is having a similar effect.

We find the need for increased bandwidth all around us as our lives become increasingly intertwined with technology. A leading driver in this evolution is artificial intelligence (AI) workloads, which generate large volumes of data while solving complex computations and require fast, efficient data delivery across a vast number of data sets.

Deploying networks at speeds of up to 100Gbps – and in the near future at 400Gbps – helps reduce necessary training times. The use of lightweight protocols such as RDMA (Remote Direct Memory Access) can further help to complete the rapid exchange of data between computing nodes, while streamlining the communication and delivery process. Think about it. It was only a few years ago when a majority of data centers started deploying 10GbE in volume. And now, we are seeing a shift toward 25 and 100GbE, with the adoption of 400GbE answering the call for emerging bandwidth concerns.
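To put the jump from 10GbE to 400GbE in perspective, the short sketch below computes the ideal time to move a data set across a single link at each speed. The 1 TB data set size is a hypothetical value chosen for illustration, and the calculation assumes the link runs at full line rate with no protocol overhead, so real transfers would take longer.

```python
def transfer_seconds(dataset_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a data set over one link, ignoring protocol overhead."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    gigabits = dataset_gigabytes * 8  # 1 byte = 8 bits
    return gigabits / link_gbps


# Hypothetical 1 TB (1000 GB) training data set; not a figure from the article.
DATASET_GB = 1000

for speed_gbps in (10, 25, 100, 400):
    seconds = transfer_seconds(DATASET_GB, speed_gbps)
    print(f"{speed_gbps:>3} Gbps: {seconds:7.1f} s")
```

Under these assumptions the same data set that needs 800 seconds at 10 Gbps needs 20 seconds at 400 Gbps, which is the kind of reduction that shortens data-bound training steps.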

Transforming the Ethernet switch market

It is no wonder then, that the Ethernet switch market is undergoing a transformation. Previously, Ethernet switching infrastructure growth was led by 10/40GbE, but volume demanded that the tide start to turn in favor of 25 and 100GbE.

Analysts agree that soon 25 and 100Gb, as well as emerging 400Gb Ethernet speeds, are expected to surpass all other Ethernet solutions as the most deployed Ethernet bandwidths. This trend is being driven by mounting demands for host-side bandwidth as data center densities increase, and pressure grows for switching capacities to keep pace. More than just bandwidth, 100 and 400Gbps technology is helping to drive better cost efficiencies in capital and operating expenses, as compared to legacy connectivity infrastructure at 10/40Gbps. These increased bandwidths also enable greater reliability and lower power requirements for optimal data center efficiency and scalability.

Data center L2/L3 switching market snapshot

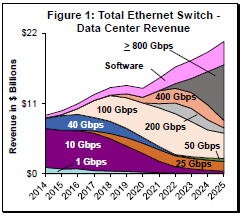

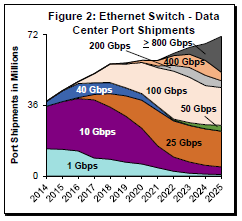

According to Dell’Oro, the data center Ethernet switching market revenue has grown to approximately $14B in 2021. It’s expected to experience a healthy growth rate of 9% CAGR – to approximately $20B by 2025. Overall total port shipments are also expected to experience significant, similar growth.
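The growth figures quoted above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only arithmetic on the rounded numbers in the text ($14B in 2021, 9% CAGR, 2025 target); the function names are illustrative and the underlying Dell’Oro data is not reproduced here.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return value * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes start to end over the period."""
    return (end / start) ** (1 / years) - 1


# Rounded figures from the text: about $14B in 2021, 9% CAGR, four years to 2025.
projected_2025 = project(14.0, 0.09, 4)
print(f"Projected 2025 revenue: ${projected_2025:.2f}B")

# Working backwards from the rounded $20B figure instead.
rate = implied_cagr(14.0, 20.0, 4)
print(f"CAGR implied by $14B -> $20B: {rate * 100:.1f}%")
```

Compounding $14B at 9% for four years gives a little under $20B, consistent with the "approximately $20B" figure once rounding is taken into account.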

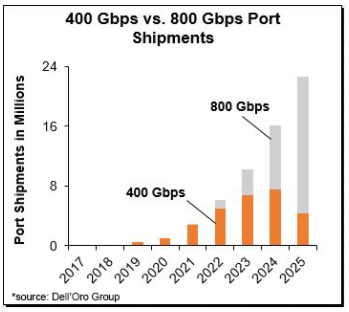

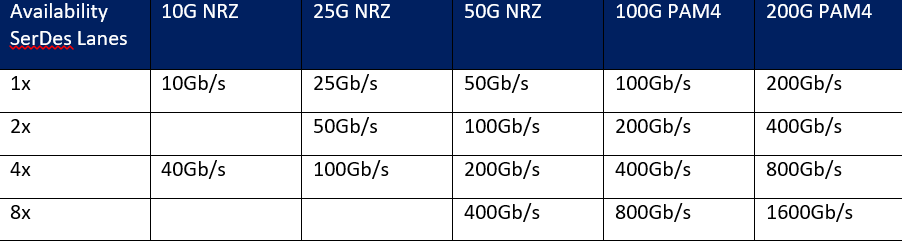

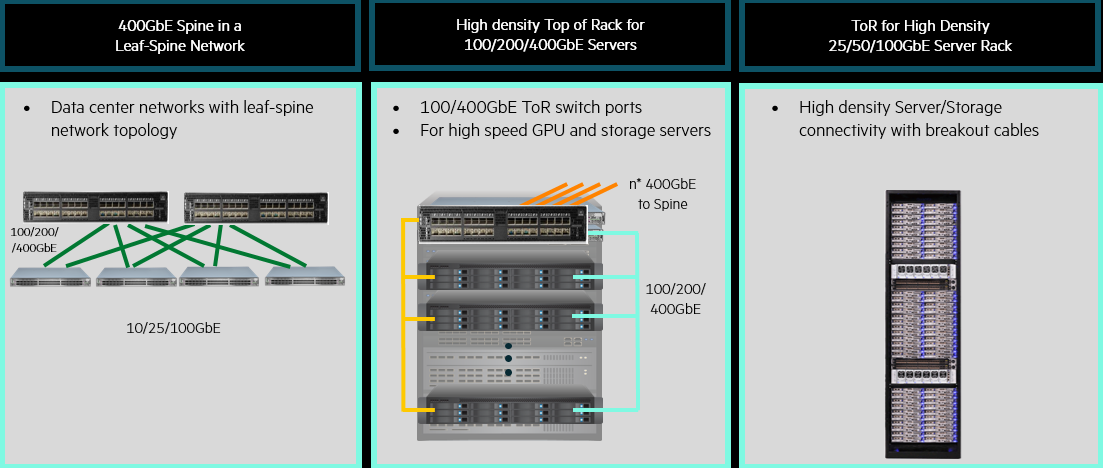

Breakout cabling provides scalability options, for example by splitting a single 200GbE port into two 100GbE links. Likewise, any 100GbE port on a switch can break out to four 25GbE ports, so that one switch port links up to four adapter cards in servers, storage, or other subsystems. Similarly, a 400Gbps port can be configured as 4x100GbE, 2x200GbE, or 1x400GbE. In the future, with 800GbE systems, we can expect deployments to include scale options at lower speeds, with breakouts to 2x400GbE or 8x100GbE. Breakout applications support many use cases, including aggregation, shuffle, better fault tolerance, and larger radix.
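The breakout options described above can be written down as a small lookup table. The sketch below lists only the modes named in the text and checks that each one adds up to the parent port speed; the table and function names are illustrative and do not correspond to any switch operating system or CLI.

```python
# Breakout modes named in the text, as (link_count, link_speed_gbps) pairs
# keyed by the parent port speed in Gbps.
BREAKOUTS = {
    100: [(4, 25)],
    200: [(2, 100)],
    400: [(1, 400), (2, 200), (4, 100)],
    800: [(2, 400), (8, 100)],  # anticipated for future 800GbE systems
}


def is_consistent(port_gbps: int, links: int, link_gbps: int) -> bool:
    """A breakout is consistent when the links sum to the parent port speed."""
    return links * link_gbps == port_gbps


def describe(port_gbps: int) -> list[str]:
    """Human-readable breakout modes for a port speed, e.g. '4x100GbE'."""
    return [f"{links}x{speed}GbE" for links, speed in BREAKOUTS.get(port_gbps, [])]


for port, modes in BREAKOUTS.items():
    assert all(is_consistent(port, links, speed) for links, speed in modes)
    print(f"{port}GbE port: {', '.join(describe(port))}")
```

Every mode in the table preserves total bandwidth; breakout changes how many devices a port can reach, not how much traffic it can carry.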

We have already experienced the market transition from 10/40GbE-based systems to 25/100GbE-based systems, and in the next couple of years we anticipate much wider adoption of 400Gbps systems by enterprises. 400Gbps is expected to be less expensive than 100Gbps on a per-bandwidth basis. Beyond offering higher bandwidth than 100Gbps systems, 400Gbps adoption also brings higher radix, lower latency, and fewer hops.

What will the future bring? The data center Ethernet switch market is expected to transition through three to four major speed upgrade cycles during the next five years. The first upgrade cycle was driven by 25G SerDes technology. The second cycle was powered by 50G SerDes technology. The third cycle, which is projected to start in the very near future, will be propelled by 100G SerDes technology. A fourth upgrade cycle, resulting from 200G SerDes, is also expected at a later phase.
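One way to see why higher radix translates into fewer hops is to count how many hosts a two-tier leaf-spine fabric can reach before a third switching tier is needed. The sketch below assumes a non-blocking design built from identical switches, with half of each leaf's ports facing hosts and half facing spines. The 32-port 400GbE switch is a hypothetical example, not a specific product.

```python
def ports_after_breakout(physical_ports: int, links_per_port: int) -> int:
    """Effective radix once every physical port is broken out."""
    return physical_ports * links_per_port


def max_hosts_two_tier(radix: int) -> int:
    """Hosts in a non-blocking two-tier leaf-spine of identical switches."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    leaves = radix               # each spine port connects to one leaf
    hosts_per_leaf = radix // 2  # half the leaf ports face hosts, half face spines
    return leaves * hosts_per_leaf


# Hypothetical 32-port 400GbE switch, used natively and with 4x100GbE breakout.
native_radix = ports_after_breakout(32, 1)
breakout_radix = ports_after_breakout(32, 4)

print(f"radix {native_radix}: up to {max_hosts_two_tier(native_radix)} hosts at 400GbE")
print(f"radix {breakout_radix}: up to {max_hosts_two_tier(breakout_radix)} hosts at 100GbE")
```

Under these assumptions, quadrupling the radix through breakout raises the two-tier host count sixteen-fold, so a fabric that would otherwise need a third tier, and the extra hops that come with it, can stay at two.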
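The link between SerDes generations and port speeds is simple multiplication: a port's speed is the per-lane rate times the number of lanes. The sketch below uses nominal lane rates (25, 50, 100, and 200 Gbps) and ignores encoding and forward error correction overhead, so it is an approximation of how lanes map to ports rather than an electrical specification.

```python
def lanes_needed(port_gbps: int, lane_gbps: int) -> int:
    """Number of SerDes lanes of a given nominal rate needed for a port speed."""
    lanes, remainder = divmod(port_gbps, lane_gbps)
    if lanes == 0 or remainder:
        raise ValueError(f"{port_gbps}G is not a whole multiple of {lane_gbps}G lanes")
    return lanes


# Nominal lane rates for the four upgrade cycles named in the text,
# paired with example port speeds chosen for illustration.
examples = [(100, 25), (400, 50), (400, 100), (800, 100), (800, 200)]

for port, lane in examples:
    print(f"{port}GbE over {lane}G SerDes: {lanes_needed(port, lane)} lanes")
```

Each faster SerDes generation either halves the lane count for a given port speed or doubles the port speed at the same lane count, which is why lane rate is a useful marker for switch upgrade cycles.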

Transformation in data center architectures: Is edge eating the cloud?

Many factors are driving the evolution of modern data center architecture. According to Gartner, a vast majority of data will be processed outside traditional data centers by 2025. We’ll start to see modern data center architectures designed around a combination of edge, centralized data centers, and the cloud. There are exciting, transformational developments in how data is managed in this new ecosystem.

On the flip side, this transformation causes some IT managers to worry that edge is eating the cloud.

On the contrary, a balance will have to be struck among edge, data center, and cloud, leveraging the strengths of each to maintain well-orchestrated distributed workloads. Each will carry its own share of the workload, depending on the type of application. In most cases, this orchestration will work in sync with distributed architectures, depending on the application’s requirements. Based on workload and application needs, every organization will need to find the point of equilibrium for the best mix of edge, data center, and cloud.

With connectivity options of 10/25GbE, 100GbE, 200GbE, and 400GbE all available, we’ll start to see the best option chosen based on application needs. For example, many edge locations may continue to use 25/100GbE, while central data centers will start to leverage 100/200/400GbE, depending on the bandwidth and latency the application needs.

Edge computing: Processing data closer to point of creation

Edge computing allows data from devices to be analyzed at the edge before being sent to the data center. Using intelligent edge technology can help maximize a business’s efficiency. Instead of sending data out to a central data center, analysis is performed at the location where the data is generated. Micro data centers at the edge integrate storage, compute, and networking to deliver the speed and agility needed to process data closer to where it is created. For applications that require high-performance, low-latency infrastructure while using edge computing, there is no need to trade off high bandwidth against ultra-low latency; both are possible on the local area network at the edge.

The combination of edge and central data center plus cloud brings multiple benefits to an organization or enterprise.

- It reduces the load on the network to minimize network congestion

- It improves the reliability of the network by distributing and load balancing between edge and central data center location(s)

- It enhances the customer experience for latency-sensitive applications

- It reduces the total cost of ownership (TCO) by optimizing the infrastructure hosted at the central location with lower-cost edge infrastructure

Clearly, the network will need to support more feature sets to accommodate the new requirements of digital transformation. Edge computing and IoT will power the need for security, automation, and AI/ML.

Designing for today while planning for the future

Most modern data centers are highly virtualized and running on solid state storage, whether or not you are supporting AI/ML or DA workloads today. For both fast NVMe storage and all computational inferencing workloads, predictable and reliable performance depends on fast and accurate data delivery, and this starts at the network.

Rapid improvement of data center computing, together with low-latency storage solutions, has moved data center performance bottlenecks to the network. Today’s data centers should be designed to handle this anticipated bandwidth with a low-latency, lossless Ethernet fabric, while leveraging the new connectivity solutions up to 400Gbps. Data center switching needs to deliver ultra-low latency and zero packet loss (no avoidable packet loss, for example due to traffic microbursts), and to deliver that performance fairly and consistently across any packet size, mix of port speeds, or combination of ports.

For more information, please check out these blogs.

- High-performance, low-latency networks for edge and co-location data centers

- Need for speed and efficiency from high performance networks for Artificial Intelligence

Follow Faisal on Twitter @ffhanif.

Storage Experts

Hewlett Packard Enterprise

twitter.com/HPE_Storage

linkedin.com/showcase/hpestorage/

hpe.com/storage
